PACER Parser: Observations, Warnings, and Advice

Here at SCALES, one of our cardinal rules is "never make assumptions about PACER." In theory, the docket sheets stored in PACER, the federal judiciary's public-access record-keeping system, are as clean a source of data as any researcher could hope for: they are highly structured, adhere to a consistent format, and provide a remarkably thorough record of litigation events and case metadata. However, as we've built a parsing pipeline to convert docket sheets from raw HTML into database-ready JSON objects, we've found that the PACER platform — while indeed showing enormous promise as a driver of large-scale legal research — also suffers from a host of shortfalls, quirks, and inconsistencies.

In this guide, we'll describe some of these peculiarities and offer suggestions for working around them, whether you're using our already-parsed case data corpus or running our parser on docket sheets of your own. PACER may be an unpredictable creature, but with the proper analytical strategies at your disposal, we're confident that you'll find it to be a wellspring of insights into the U.S. federal judiciary.

This guide assumes some basic familiarity with the JSON schema used by our PACER parser. For a detailed description of the schema, see the parser documentation.

How Do I Use This Parse?

It only takes a glance at a docket sheet to recognize that the PACER system was designed for attorneys, not researchers. Want to know the phone and fax numbers for a plaintiff's counsel? No problem! Simply interested in whether the plaintiff's claims were settled? You might be in for a long hunt. SCALES's parser does make PACER more analysis-friendly by pulling names, dates, header information, and other pertinent content out of the docket sheets and into orderly JSON trees, but you may still need to develop some search and cleaning routines of your own in order to coax PACER's lawyer-oriented data structure into a form more amenable to broad investigation.

For example, let's say you're interested in determining how many litigants in your district applied for fee waivers in a given year. You might start by perusing the easiest-to-use section of SCALES's JSON schema: the header fields, where top-level data about a case and its parties are stored after being pulled out of the relatively straightforward HTML tables atop each docket sheet:

These fields do provide some basic case facts — like filing date, nature of suit, and (in the unredacted data) lawyer names and litigant pro-se status — but they don't capture much detail below the surface. How about SCALES's 'flags' field, which records the keywords that PACER displays in the top right corner of the docket sheet for convenient filtering of cases?

Some IFP-related flags do occasionally turn up here, but the flag system not only varies widely from district to district but is also applied with very little consistency, and in our experience it's not a suitable basis for research work.

Sooner or later, your fee waiver search will probably bring you to the main attraction of PACER docket sheets: the docket table, a play-by-play account of the case history in which each row describes a particular action taken in court:

Because a fee waiver must be requested via a motion to proceed in forma pauperis and granted or denied by a judge's order, you can expect that the vast majority of fee waivers will leave a footprint in this section of the docket sheet (see e.g. rows 2 and 3 of the table above). How should you go about locating this footprint? You could choose a sensible search term such as "in forma pauperis" and identify all cases whose SCALES 'docket' field contains a match, but given the multitude of district- and clerk-specific docketing conventions, this strategy runs the risk of being both too broad (flagging red herrings like "no motion to proceed in forma pauperis was received") and too narrow (missing outliers like "motion to proceed without prepayment of fees"). Thus, a more fruitful strategy might involve manually reviewing all fee-waiver-related language that appears in the dataset, and then writing some regular expressions to encapsulate the full range of that language when searching docket entries for fee waiver activity. Although this approach may seem overly elaborate, especially given the simplicity of the underlying research question, the inconvenient reality of PACER research is that simple approaches are rarely successful!

The general approach suggested here — that is, starting with human exploration of docket sheets that relate to the topic at hand, and using those observations to craft regex searches or other transformation functions that take JSON docket data and winnow it down to the desired format — can easily translate to a number of potential areas of investigation, from lawsuit outcomes to criminal sentencing to trends in judge and party behavior. It's also worth noting that the SCALES team is hard at work conducting these investigations ourselves, with the end goal of developing a library of docket labels, annotations, and interpretations that can save individual researchers from having to reinvent the wheel. In fact, we've already written a detailed script that detects in forma pauperis activity using the procedure outlined above, and you'll soon be able to use the resulting IFP labels when conducting research with SCALES's Satyrn software.

Stubs, Filing Errors, and Other Junk

For the most part, awkwardly formatted docket sheets that are difficult to apply in the context of certain research are still useful data sources on the whole. Sadly, though, certain docket sheets are almost entirely bereft of helpful information. Whether they were opened due to a clerical error, transferred or terminated before any meaningful data were input into PACER, or so redacted as to be unreadable, these junk cases have little utility in many research applications, and you may want to take some extra measures to prevent such cases from polluting your large-scale analytical work.

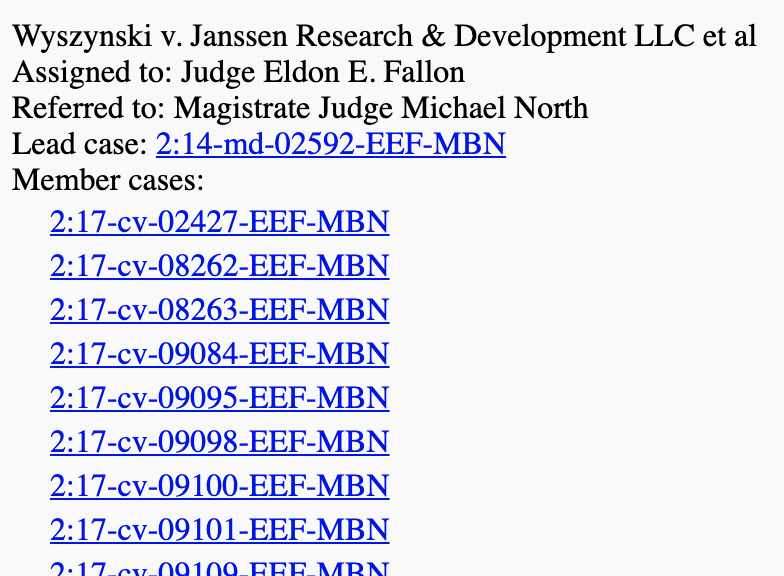

A case in point: When running an early version of our parser on the set of all cases filed in 2016, we noticed that runtimes were taking a significant hit in the Eastern District of Louisiana. Upon opening a few of the district's unusually large HTML files, we saw barely any case activity, but we also found thousands of lines of case numbers corresponding to lawsuits in a single multi-district litigation:

As it turned out, all the troublesome Louisiana docket sheets were part of a massive wave of filings against the manufacturers of the blood thinner Xarelto. In fact, of the 17,060 civil cases opened in the Eastern District of Louisiana in 2016, some 8,948 of them (52%) were opened as part of that single MDL! While the Xarelto litigation is important in its own right, these stub cases are not particularly representative of broader trends in federal court activity, and we have occasionally chosen to exclude them from subsequent studies and case counts.

Of course, the specific hobgoblins affecting case aggregation vary according to the work at hand; a junk case in one context may be a valuable datapoint in another. Nevertheless, there are some basic checks that can provide a good starting point for detecting case-level noise regardless of the research scenario. The SCALES 'filed_in_error_text' field captures header lines that suggest a case was opened erroneously, such as "Incorrectly Filed," "Not Used," and "Do Not Docket." Parties listed as "Suppressed," or docket entries that use language like "suppressed" or "sealed," can indicate that some data have been removed from the docket sheet due to sealing orders (although these indicators will not reveal the full extent of court-ordered sealing activity, as some sealed cases are hidden from the PACER website altogether). And docket tables containing only a few short docket entries might be a sign that the court considered the litigation to be frivolous, or that the real action is taking place elsewhere (as in cases that were transferred to another district or remanded to a state court).

Where's All the Action?

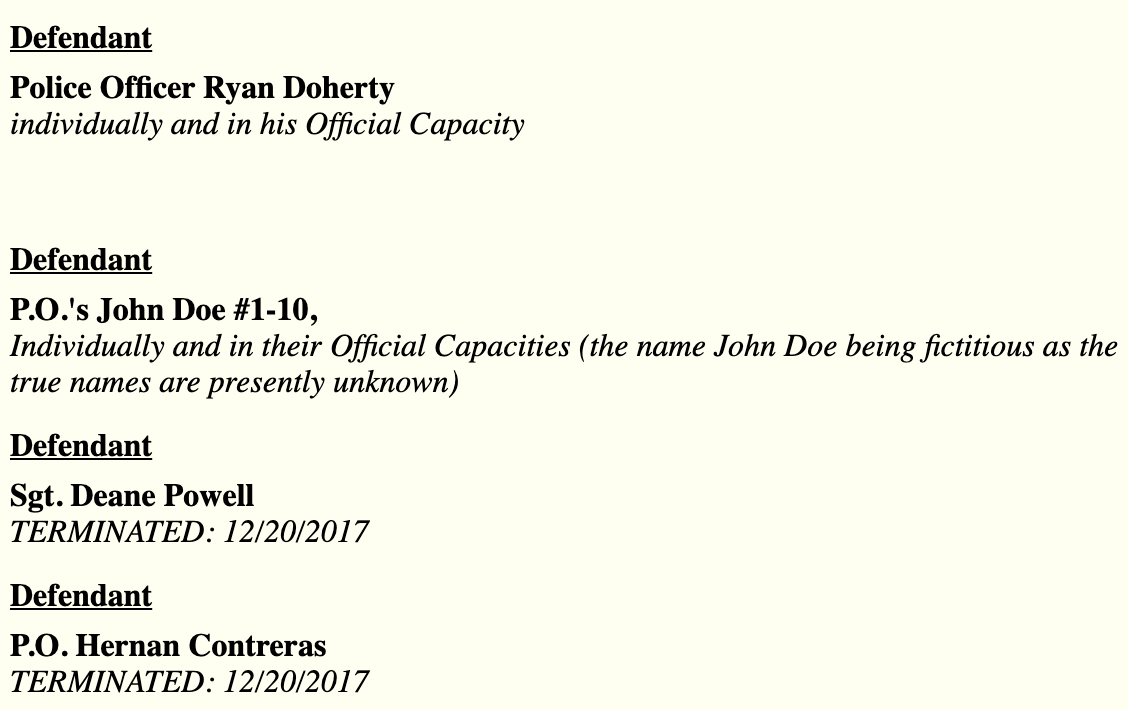

Even when your PACER dataset is free of all the aforementioned pitfalls — no stub cases, no auto-populated data errors, verbose docket entries easily searchable via regex — certain questions may still seem impenetrable. At SCALES, we run into these sorts of questions all the time, especially when partners come to us with domain-specific inquiries like "How many defendants in police misconduct cases pursue an immunity defense?" or "Which branches of medicine do malpractice defendants most frequently work in?" Often, targeted research questions are difficult to pursue using PACER because the answers reside not in the docket sheet itself but rather in the case documents attached to the docket sheet. For instance, imagine we are investigating the police misconduct question, and we come across a case whose defendants are police officers:

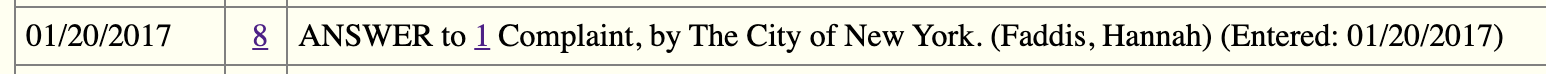

As in most docket sheets, the docket entries go on to list all the noteworthy episodes in the lifetime of the case (motions, orders, status hearings, trial proceedings), but regrettably, they don't describe what happened in those episodes. In order to resolve the question "Did the defendants invoke an immunity defense?", we have to find the docket entry noting the defendants' response to the plaintiff's complaint, and retrieve the link to the PDF document attached to that docket entry:

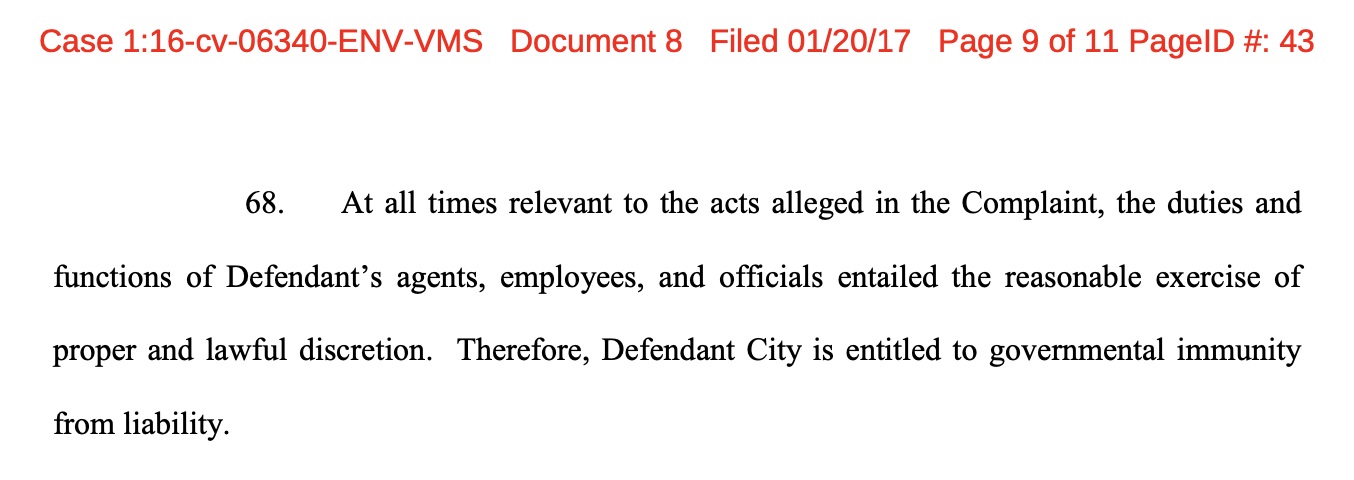

It's only after purchasing the document, which in this case is a scan of the initial filing that the defendants submitted to the court clerk, that we can find the answer we've been looking for:

Not only does this approach require a sizable budget (as we discuss more extensively in the next section), but it is also tricky to implement at scale. Manual review of case documents is labor- and time-intensive, and automated document parsing (a challenging task even with state-of-the-art OCR technology) is beyond the scope of the current generation of SCALES software.

Yet these limitations do not mean all is lost; even when the docket-document divide conceals information that seems crucial to your research effort, PACER documents may still provide vital hints about what to do next. A few examples: In some of our work with criminal convictions, statute citations that we assumed to be locked away in charging documents were actually accessible via "case summaries," a little-known PACER feature described in more detail in the scraper documentation. In situations where we've needed a glimpse into complaint documents, the 'raw_info' field in SCALES parties' 'entity_info' dictionaries, which is derived from the italicized subheadings attached to PACER party names, has proven helpful given that it often reproduces the self-descriptions provided by litigants in their initial filings. And for studies like the qualified immunity example above, SCALES's ongoing docket ontology project — in which we're training transformer models to predict which docket entries in a case correspond to which phases of that case's lifetime (opening, early dismissal, settlement, judgment...) — may provide a way to zero in on a few documents of interest per case, thereby drastically reducing the overhead associated with mining data from documents. In all of these instances, PACER's perennial unpredictability, so often a hindrance to effective research, provides an unexpected boon in the form of valuable data hidden in the nooks and crannies of docket sheets.

Epilogue: The PACER Fee Puzzle

Up to this point, we've mostly glossed over the problem of minimizing download fees when conducting research using PACER. In part, this is because our parser can't solve this problem; smart usage of our JSON schema can't ameliorate high data costs to the same degree that, say, leveraging the 'filed_in_error_text' field can help reduce noise in a PACER dataset. What's more, we're hopeful that the fee puzzle might be solved for good in just a few years, thanks to a bipartisan proposal to eliminate PACER fees that's currently advancing through Congress. Still, since download fees are arguably a greater roadblock to legal research than any of the PACER shortfalls mentioned thus far, it's worth discussing some ways of minimizing their impact on your work.

One strategy for lowering costs is to take advantage of already-existing repositories of free legal data. For example, the Federal Judicial Commission's Integrated Data Base (IDB) compiles metadata about federal court cases into publicly available datasets, and the Free Law Project's RECAP initiative allows users to upload their PACER purchases to a central archive where other RECAP users can access them for free. However, the IDB lacks much of the depth provided by docket sheets and often slips out of sync with PACER, while the RECAP database only stores the dockets and documents that its users interact with and can't elucidate any trends across entire districts or years. Thus, although these sources are wonderful starting points for data exploration and valuable contributions to the broader movement towards judicial data transparency, they are limited in their potential for engendering robust research.

Fortunately, if you decide to use PACER downloads for your research, you may be able to trim costs by way of intelligent downloading practice. Understanding the peculiarities of PACER's search functionality can help you hone in on the cases you need and eliminate the expenses associated with overly broad search queries. Certain PACER menu options may allow you to exclude unwanted information from the docket sheets you request, thereby reducing the total number of docket pages you pay for. And the documentation for the SCALES scraper contains some additional tips and command-line options for lowering costs when using our scraping interface.